My new blog site at blog.dhananjaya.me

Inline Functions In C

Hierarchical Clustering

Hierarchical clustering is an unsupervised machine learning algorithm where, unlike the k-means algorithm, you don’t have to specify the number of clusters to be found in advance.

Definition:

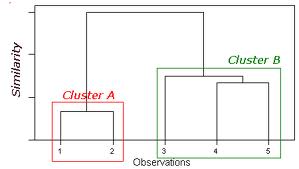

Recursively merging (or splitting) clusters produces a hierarchy that can be represented as a dendrogram.

After clustering, the resulting model can be used to assign new data instances to clusters, similar to KNN.
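A minimal sketch of such a prediction step, assuming the clusters have already been fixed (for example by cutting the dendrogram at some level) and assuming 1-D data for brevity: a new value is assigned to the cluster that contains its nearest member, which is a 1-nearest-neighbour rule. The function name `assign_cluster` is hypothetical.

```cpp
#include <cmath>
#include <cstddef>
#include <limits>
#include <vector>

// Returns the index of the cluster that contains the member closest to x
// (a 1-nearest-neighbour rule). Assumes at least one non-empty cluster.
std::size_t assign_cluster(double x, const std::vector<std::vector<double>>& clusters) {
    std::size_t best_cluster = 0;
    double best_distance = std::numeric_limits<double>::infinity();
    for (std::size_t c = 0; c < clusters.size(); ++c) {
        for (double member : clusters[c]) {
            double d = std::fabs(x - member);  // 1-D distance
            if (d < best_distance) {
                best_distance = d;
                best_cluster = c;
            }
        }
    }
    return best_cluster;
}
```

With the clusters {1, 2}, {6, 7} and {20}, the value 5.5 is assigned to the second cluster (index 1), because 6 is its nearest member.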

Learning Algorithms: Agglomerative clustering

Bottom-up algorithms treat each instance as a singleton cluster at the outset and then successively merge (or agglomerate) pairs of clusters until all clusters have been merged into a single cluster that contains all instances.

(In contrast to agglomerative (bottom-up) clustering, there also exist top-down clustering algorithms, which proceed by splitting clusters recursively until individual instances are reached.)

Algorithm:

- Requires a distance measure

- Start by treating each instance as its own cluster

- Find the two closest clusters and merge them

- Continue merging until only one cluster is left

- Record the merges (they form the clusters at each level of the dendrogram)
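The steps above can be sketched in C++ as follows. This is a naive illustration, assuming 1-D points, absolute difference as the distance measure, and single linkage to compare clusters; the names `Merge`, `single_link` and `agglomerate` are hypothetical. Every pass compares all pairs of clusters, so the whole loop is roughly cubic in the number of points, which is fine for a toy example but not for large data sets.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <limits>
#include <vector>

// One recorded merge: the two clusters that were joined and the distance at which it happened.
struct Merge {
    std::vector<double> left, right;
    double distance;
};

// Single-linkage distance between two clusters: the smallest gap between any pair of members.
double single_link(const std::vector<double>& a, const std::vector<double>& b) {
    double best = std::numeric_limits<double>::infinity();
    for (double x : a)
        for (double y : b)
            best = std::min(best, std::fabs(x - y));
    return best;
}

// Naive agglomerative clustering: start with singletons, repeatedly merge the closest pair.
std::vector<Merge> agglomerate(const std::vector<double>& points) {
    std::vector<std::vector<double>> clusters;
    for (double p : points) clusters.push_back({p});  // each instance starts as its own cluster

    std::vector<Merge> merges;
    while (clusters.size() > 1) {
        std::size_t bi = 0, bj = 1;
        double bd = std::numeric_limits<double>::infinity();
        for (std::size_t i = 0; i < clusters.size(); ++i)
            for (std::size_t j = i + 1; j < clusters.size(); ++j) {
                double d = single_link(clusters[i], clusters[j]);
                if (d < bd) { bd = d; bi = i; bj = j; }  // closest pair so far
            }
        merges.push_back({clusters[bi], clusters[bj], bd});  // record the merge
        clusters[bi].insert(clusters[bi].end(), clusters[bj].begin(), clusters[bj].end());
        clusters.erase(clusters.begin() + bj);  // bj > bi, so index bi stays valid
    }
    return merges;
}
```

For the points 1, 2, 6, 7 and 20 the recorded merge distances are 1, 1, 4 and 13; these are the heights at which the branches join in the dendrogram.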

Distance Measuring

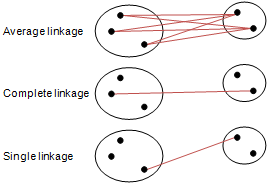

- Single linkage (minimum distance between two clusters)

- Complete linkage (maximum distance between two clusters)

- Centroid linkage (calculate centroid for each cluster and measure between centroids)

- Average linkage (average distance between each pair of members of the two clusters)

- Ward’s clustering method (see the Wikipedia article)
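To make the first four measures concrete, here is a sketch for 2-D points with Euclidean distance. The `Point` type and the function names are assumptions made for illustration. Ward’s method is left out because it compares clusters by the increase in within-cluster variance a merge would cause, rather than by a simple distance.

```cpp
#include <algorithm>
#include <cmath>
#include <vector>

struct Point { double x, y; };
using Cluster = std::vector<Point>;  // assumed non-empty in every function below

// Euclidean distance between two points.
double euclidean(const Point& p, const Point& q) {
    return std::hypot(p.x - q.x, p.y - q.y);
}

// Single linkage: the minimum distance between any two members.
double single_linkage(const Cluster& a, const Cluster& b) {
    double d = euclidean(a.front(), b.front());
    for (const Point& p : a)
        for (const Point& q : b) d = std::min(d, euclidean(p, q));
    return d;
}

// Complete linkage: the maximum distance between any two members.
double complete_linkage(const Cluster& a, const Cluster& b) {
    double d = euclidean(a.front(), b.front());
    for (const Point& p : a)
        for (const Point& q : b) d = std::max(d, euclidean(p, q));
    return d;
}

// Average linkage: the mean distance over all pairs of members.
double average_linkage(const Cluster& a, const Cluster& b) {
    double sum = 0.0;
    for (const Point& p : a)
        for (const Point& q : b) sum += euclidean(p, q);
    return sum / static_cast<double>(a.size() * b.size());
}

// Centroid of a cluster: the mean of its members' coordinates.
Point centroid(const Cluster& c) {
    Point m{0.0, 0.0};
    for (const Point& p : c) { m.x += p.x; m.y += p.y; }
    m.x /= static_cast<double>(c.size());
    m.y /= static_cast<double>(c.size());
    return m;
}

// Centroid linkage: the distance between the two centroids.
double centroid_linkage(const Cluster& a, const Cluster& b) {
    return euclidean(centroid(a), centroid(b));
}
```

For a = {(0, 0)} and b = {(3, 0), (5, 0)}, single linkage gives 3, complete linkage 5, and both average and centroid linkage give 4.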

C++: extracting a number from a string

In C we have atoi and its relatives to get a number out of a string, but how do we do the same thing in C++ without using the C stdlib functions?

Easy: we can use the istringstream class.

Here’s an example:

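#include <iostream>
#include <sstream>
#include <string>
using namespace std;

int main()
{
    string s = "1 2 3";
    istringstream iss(s);
    int n;
    while (iss >> n)   // the loop stops as soon as no further integer can be read
        cout << "# " << n << endl;
}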

You have to include the sstream header file to use the istringstream class.
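The loop above silently stops at the first non-number. When you want to convert a whole string and also know whether the conversion worked (something atoi cannot report: it returns 42 for "42abc" and 0 for "abc"), a small wrapper around istringstream can check that nothing but whitespace is left over. This is a sketch assuming C++17 for std::optional; `parse_int` is a hypothetical name.

```cpp
#include <istream>
#include <optional>
#include <sstream>
#include <string>

// Parses the whole string as an int. Returns std::nullopt if the string does not
// start with a number (after optional whitespace), the number overflows int,
// or anything other than whitespace follows the number.
std::optional<int> parse_int(const std::string& s) {
    std::istringstream iss(s);
    int n;
    if (!(iss >> n)) return std::nullopt;  // no valid int at the start
    iss >> std::ws;                        // skip trailing whitespace
    if (!iss.eof()) return std::nullopt;   // leftover characters such as "abc"
    return n;
}
```

With this helper, parse_int(" 7 ") yields 7, while parse_int("42abc") and parse_int("abc") yield std::nullopt.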

File Stream Objects in C++

If we want to open a file for reading or writing in C++, we do so by opening a file stream. A file stream object has an open("filename.ext") method that we can use to specify which file to open.

#include <fstream>
using namespace std;

// inside main():
ifstream in_file;
in_file.open("file.name");

// or, equivalently, let the constructor call open() for us:
ifstream in_file2("file.name");

We can use the overloaded >> and << operators to read and write, just like we do with cin and cout.

#include <iostream>
#include <fstream>   // ifstream for input only, ofstream for output only, fstream for both
#include <string>
using namespace std;

int main()
{
    ifstream in_file("readme.txt");
    string buffer_str;

    in_file >> buffer_str;          // read one word from in_file
    cout << buffer_str << endl;     // print it to stdout

    // read the remaining words until the end of the file
    while (in_file >> buffer_str)
        cout << buffer_str << endl;

    // Avoid looping on in_file.eof(): eof() only becomes true after a read has
    // already failed, so `while (!in_file.eof()) { in_file >> s; cout << s; }`
    // typically prints the last word twice when the file ends with a newline.
}
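Writing works the same way with ofstream and <<. The sketch below, with the hypothetical helper names `write_numbers` and `sum_numbers`, writes a few integers to a file and reads them back with >>, exactly as we would with cout and cin.

```cpp
#include <fstream>
#include <string>
#include <vector>

// Writes each number on its own line, the same way we would write to cout.
void write_numbers(const std::string& path, const std::vector<int>& numbers) {
    std::ofstream out(path);
    for (int n : numbers)
        out << n << '\n';
}   // `out` is destroyed here, which flushes and closes the file

// Reads the integers back with >>, the same way we would read from cin.
int sum_numbers(const std::string& path) {
    std::ifstream in(path);
    int n = 0, sum = 0;
    while (in >> n)   // stops at end of file or at the first non-number
        sum += n;
    return sum;
}
```

Calling write_numbers("numbers.txt", {1, 2, 3}) and then sum_numbers("numbers.txt") gives 6.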

We can use the .close() method to close the file and release its resources.

#include <iostream>
#include <fstream>   // ifstream for input only, ofstream for output only
#include <string>
using namespace std;

int main()
{
    ifstream in_file("readme.txt");
    string buffer_str;

    while (in_file >> buffer_str)
        cout << buffer_str << endl;

    // release the file explicitly with .close()
    in_file.close();
}

Opening a file can fail (for example, when the file does not exist); in that case the fail bit is set in the file stream object after the open call. We can test the stream object itself to find out whether the open succeeded: if (in_file) { /* file opened successfully */ }.

After a file stream object has opened a file, it remains associated with that file, so we have to close the file before we can open another file with the same stream object. If we call .open on a file stream object that already has an open file, the open fails.

Most importantly, when a file stream object is destroyed, for example by going out of scope or by delete, its associated file is closed automatically.
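The three points above (checking whether the open succeeded, closing before reusing a stream object, and automatic closing at the end of a scope) can be seen together in this sketch. The function names are hypothetical, and returning -1 for "could not open the file" is an arbitrary choice for the example.

```cpp
#include <fstream>
#include <string>

// Counts whitespace-separated words in one file; returns -1 if it cannot be opened.
long count_words(const std::string& path) {
    std::ifstream in(path);
    if (!in) return -1;              // open failed, so failbit is set
    long count = 0;
    std::string word;
    while (in >> word) ++count;
    return count;
}   // `in` goes out of scope here, so the file is closed automatically

// Reuses one stream object for two files: close() the first before open()ing the second.
long count_words_in_both(const std::string& first, const std::string& second) {
    std::ifstream in(first);
    if (!in) return -1;
    long count = 0;
    std::string word;
    while (in >> word) ++count;

    in.close();                      // detach the stream from the first file
    in.clear();                      // reset eof/fail bits (since C++11 a successful open() also does this)
    in.open(second);
    if (!in) return -1;
    while (in >> word) ++count;
    return count;
}
```

If "a.txt" holds three words and "b.txt" holds two, count_words_in_both("a.txt", "b.txt") returns 5.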

And how do we open a file for writing or appending?

Just like C, C++ has constructs that let us choose the mode in which a file is opened. The modes are members of ios_base and are available through the stream classes, e.g. ofstream::app:

in -- open for reading; use with ifstream or fstream

out -- open for writing; if the file exists it is truncated; use with ofstream or fstream

app -- open for appending; existing data is kept and every write goes to the end of the file

ate -- seek to the end immediately after opening; use with other modes

trunc -- discard the existing contents of the file when opening

binary -- perform IO in binary mode rather than text mode; use with other modes

// examples

ofstream out("file1"); // out and trunc are implicit

ofstream out2("file1", ofstream::out); // trunc is implicit

ofstream out3("file1", ofstream::out | ofstream::trunc); // multiple modes are selected with bit-wise or operator

For more: http://en.cppreference.com/w/cpp/io
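As a sketch of how the modes differ in practice (the file name and the helper names `write_two_lines` and `read_lines` are made up for the example), the first block in `write_two_lines` opens the file with out | trunc, so any previous content is discarded, and the second reopens it with app, so the new line is added after the existing one.

```cpp
#include <fstream>
#include <string>
#include <vector>

// Writes two lines: the first with out | trunc, the second with app.
void write_two_lines(const std::string& path) {
    {
        // out | trunc: create the file or empty an existing one, then write.
        std::ofstream out(path, std::ofstream::out | std::ofstream::trunc);
        out << "first\n";
    }   // closed when `out` goes out of scope
    {
        // app: keep the existing contents; every write goes to the end of the file.
        std::ofstream out(path, std::ofstream::app);
        out << "second\n";
    }
}

// Reads the file back line by line.
std::vector<std::string> read_lines(const std::string& path) {
    std::ifstream in(path);
    std::vector<std::string> lines;
    std::string line;
    while (std::getline(in, line))
        lines.push_back(line);
    return lines;
}
```

Running write_two_lines twice on the same file still leaves exactly the two lines "first" and "second", because trunc empties the file at the start of each run.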

Performance with high-level languages

Today, while looking through the Python-Ideas mailing list, I came across this presentation (https://speakerdeck.com/alex/why-python-ruby-and-javascript-are-slow), which talks about the reasons behind the slowness of high-level languages such as Python and how even a language like C can be slow because of design defects. On the same mailing list, people were saying that the author hadn’t written idiomatic Python (“pythonic”) code. However, the presentation has a lot to teach about how one should think about performance when using a very high-level language like Python.

Even though we don’t allocate memory ourselves as we do in C, the language is allocating it for us. Memory allocation is notoriously slow to start with, so in my personal opinion we should use the language in a way that reduces unnecessary allocations. As a believer that higher-level constructs can deliver better performance, I think we should look deeply into how features really work before using them blindly.

For example, we can use iterators in Python so that each value is produced only when it is needed, instead of allocating a whole list up front.

Basic Annotations for .NET Code First design

Code First modeling in the Entity Framework (EF) workflow allows you to use your own domain classes to represent the database model. Code First relies on a programming pattern known as convention over configuration: there is a default configuration that is followed as a convention, so you don’t have to configure everything yourself because most of it is already there. If you want more control, you can certainly change the default configuration.

In Code First, these conventions are changed by using data annotations. In this article we are going to use the data annotations found in the System.ComponentModel.DataAnnotations namespace (NotMapped, ComplexType and ForeignKey live in its System.ComponentModel.DataAnnotations.Schema sub-namespace).

Let’s take an example:

public class Blog

{

public int Id { get; set; }

public string Title { get; set; }

public string BloggerName { get; set;}

public virtual ICollection<Post> Posts { get; set; }

}

public class Post

{

public int Id { get; set; }

public string Title { get; set; }

public DateTime DateCreated { get; set; }

public string Content { get; set; }

public int BlogId { get; set; }

public ICollection<Comment> Comments { get; set; }

}

The property name Id in the Blog model tells EF that it is the key, so EF makes it the primary key of the table. You can also use a name like BlogId: by convention, EF recognizes a property named after the class followed by Id as the key. But if you want to use some other name for the key (PrimaryTrackingKey in this case), you have to tell EF explicitly that it is the primary key.

public class Blog

{

[Key]

public int PrimaryTrackingKey { get; set; }

public string Title { get; set; }

public string BloggerName { get; set;}

public virtual ICollection<Post> Posts { get; set; }

}

We do this by placing a data annotation just above the property. Now EF knows that PrimaryTrackingKey is the primary key of the table, and the database column becomes a primary key of type int, not null.

Required

Required tells EF that the field/column is required, i.e. it is not nullable. In MVC it also automatically generates client-side validation for the corresponding input box.

MaxLength and MinLength

[MaxLength(10),MinLength(5)]

public string Name { get; set; }

Behind the scenes, MaxLength makes the column an nvarchar(10), while MinLength only adds validation code.

That’s not all: you can also specify a custom validation error message, like this:

[MaxLength(10, ErrorMessage="Name cannot exceed 10 characters"),MinLength(5)]

public string Name { get; set; }

NotMapped

[NotMapped]

public string BlogCode   // assumes the model also has FirstName and LastName properties
{
    get
    {
        return FirstName + " " + LastName;
    }
}

Sometimes your model may have properties that have nothing to do with storage in the database; they are only calculated on the fly. For such cases the NotMapped annotation comes to the rescue: it tells EF that this property doesn’t go into the database.

What if you want to use a user-defined type (class) as a property type in your model? EF lets you do that too: mark the user-defined class with the ComplexType annotation, and you can use that type like any other type in your model; its properties are stored as columns of the owning entity’s table.

Most of the time EF takes care of foreign key relationships by convention, but when you break the naming conventions you can use the ForeignKey("for_key") annotation to help EF.

RESTful API

REST in RESTful API stands for Representational State Transfer, which means that only the URLs/URIs in the REST API can change the state of the server. A RESTful API does not use (server-side) sessions to identify the browser/device the way normal websites do. Each request you make contains all the information (state) the server needs to fulfill it.

A RESTful API has unique URLs that uniquely represent entities, and there is no verb/action in the URL/URI. Your URLs should only represent entities, so URLs like /Account/Create or /Account/88773p/Update are not RESTful. A URL only represents the state of an entity: the URL /Account/88773p represents the state of the account named 88773p, and GET, POST, PUT and DELETE are allowed on that very URL to perform CRUD operations. The verb/action to perform on a URL is decided by the HTTP method, like GET, DELETE, etc.
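To illustrate the idea that the URL names the entity and the HTTP method names the action, here is a toy dispatcher over a hypothetical in-memory account store. It is not tied to any web framework; `Store`, `Response` and `handle` are made-up names. Treating POST as "create only if absent" and PUT as "create or replace", and the particular status codes (201 Created, 204 No Content, 404 Not Found, 405 Method Not Allowed, 409 Conflict), are assumptions of this sketch.

```cpp
#include <map>
#include <string>

// Hypothetical in-memory store: account id -> account data (a plain string here).
using Store = std::map<std::string, std::string>;

struct Response {
    int status;        // HTTP status code
    std::string body;
};

// Handles a request on the resource URL /Account/{id}.
// The URL only names the entity; the HTTP method decides what happens to it.
Response handle(Store& store, const std::string& method,
                const std::string& path, const std::string& body = "") {
    const std::string prefix = "/Account/";
    if (path.compare(0, prefix.size(), prefix) != 0 || path.size() == prefix.size())
        return {404, ""};                       // not an account URL
    const std::string id = path.substr(prefix.size());

    if (method == "GET") {                      // read
        auto it = store.find(id);
        if (it == store.end()) return {404, ""};
        return {200, it->second};
    }
    if (method == "POST") {                     // create; refuse if it already exists
        if (store.count(id) > 0) return {409, ""};
        store[id] = body;
        return {201, body};
    }
    if (method == "PUT") {                      // create or replace
        bool existed = store.count(id) > 0;
        store[id] = body;
        return {existed ? 200 : 201, body};
    }
    if (method == "DELETE") {                   // delete
        if (store.erase(id) == 0) return {404, ""};
        return {204, ""};
    }
    return {405, ""};                           // method not allowed on this resource
}
```

Note that there is no /Account/Create or /Account/88773p/Update: the same URL /Account/88773p serves every operation, and only the method changes.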

reference:

http://tomayko.com/writings/rest-to-my-wife

http://www.codeproject.com/Articles/233572/Build-truly-RESTful-API-and-website-using-same-ASP

http://www.websanova.com/tutorials/web-services/how-to-design-a-rest-api-and-why-you-should

Threading in C#

Found an awesome C# threading tutorial at http://www.albahari.com/threading/

How to zip and unzip files from the command line | Windows

zip -b . stuff *

zip is the command

-b . here the -b flag tells zip where to put the temporary file it uses while building the archive, so -b . means the current directory

stuff is the name of the zip file that we are creating; zip adds the extension, so the final name will be stuff.zip

* is the list of files to be zipped (* means all the files in the current directory)

Say this is our directory structure and we need to zip the directory called css into css.zip:

├───css

└───img

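then our command is (the -r flag makes zip recurse into the directory; without it only the directory entry itself is stored)

zip -r css css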

now our file structure is

│ css.zip

│

├───css

└───img

To unzip, we just run unzip css.zip